In this internship, I explored how to teach people about the way Artificial Intelligence (AI) creates images, focusing on Stable Diffusion. I did this with Cal Poly Humboldt's makerspace and library. I created both an interactive webpage where people can try out Stable Diffusion and a presentation that teaches how it creates images.

Initial Goals

The overall goal of the project was to create an interactive learning tool for exploring AI. It could consist of an interactive app that allows users to try AI, learn how it works, and explore ways to use AI in their academic work and professional development. The project had two phases:

- Determine how to create an interface for using AI to create text and/or visual output. This could be in the form of a webpage or a physical digital display in the library.

- Create interactive elements that help to show how AI generates the output -- a tutorial of sorts that educates users on general AI concepts and architecture. This could be in the form of a presentation.

Furthermore, a longer-term goal (for staff, not necessarily for me to execute) is for this interactive tool to be used alongside other campus resources to support students in learning how to use AI without violating the academic dishonesty policy, and how to use it actively as a creative tool (rather than as passive users generating content).

A note on ambiguity

The goal of the project was intentionally left a little ambiguous to allow for flexibility. Namely:

- The first phase only required that I determine how to create an interface. As long as I was doing research into how that could be done (so that someone else could then execute it), I was meeting the goal.

- Also in the first phase, I could work on AI that creates "text and/or visual output". I was aiming for image generation because it looks cool. However, if that cost too much to run, or was otherwise not feasible, that language allowed me to pivot to text generation.

- And lastly, it was broken into phases. That way I could focus on one thing at a time, and if I ran out of time in the second phase I could still have a deliverable.

Time management

I aimed to spend about 25 hours total on the project. That number is based on the $500 stipend I was given for this project: at $20/hour, that is 25 hours. From there, I divided the time as follows:

- 2 hours: Research
  - How Stable Diffusion works in detail, with all facets
  - How people usually teach it (diagrams & other materials)

- 2 hours: Implementation research
  - IF doing interactive: how to call an API to get images
  - IF doing a webpage: finding visual design examples for the page
  - IF doing a display (also requires a webpage): learning how to show a webpage on a display (keyword: kiosk)

- 4 hours: Build a functioning minimum viable product (MVP)
  - IF doing a webpage: calling the API
  - IF doing take-home (emailing people their generated image): sending email from the browser page
  - Laying out a rough teaching outline and path

- 2 hours: Designing the UI for the page

- 7 hours: Adding the UI to the webpage

- 7 hours: Designing teaching diagrams and specific materials for the presentation

- 1 hour: Publish
  - IF doing a webpage: figure out how/where it will be published earlier in the process
  - IF doing a display: putting the webpage on the display

Interactive Webpage

Find the interactive webpage here. Or try it embedded below:

Setting up the webpage

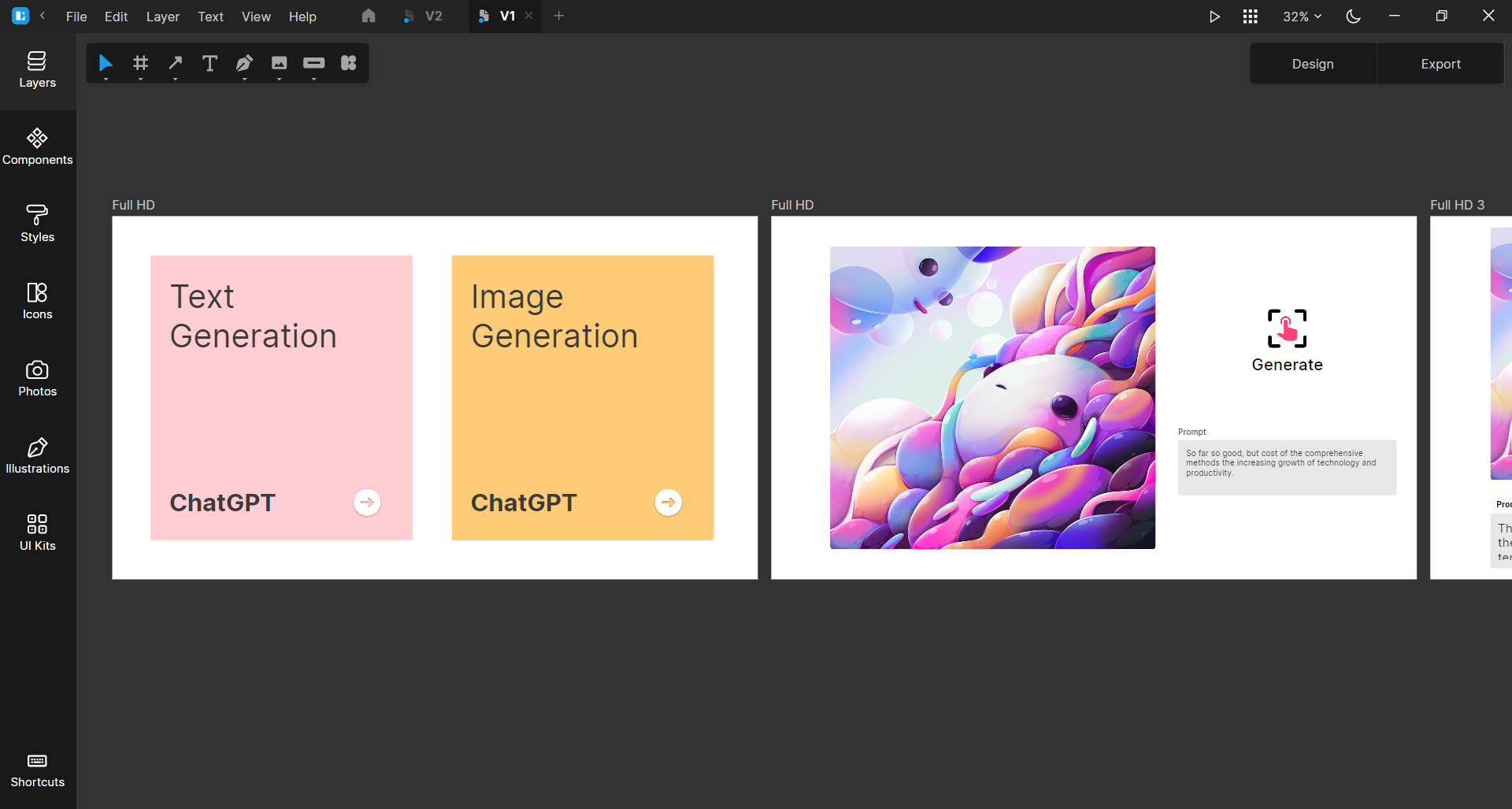

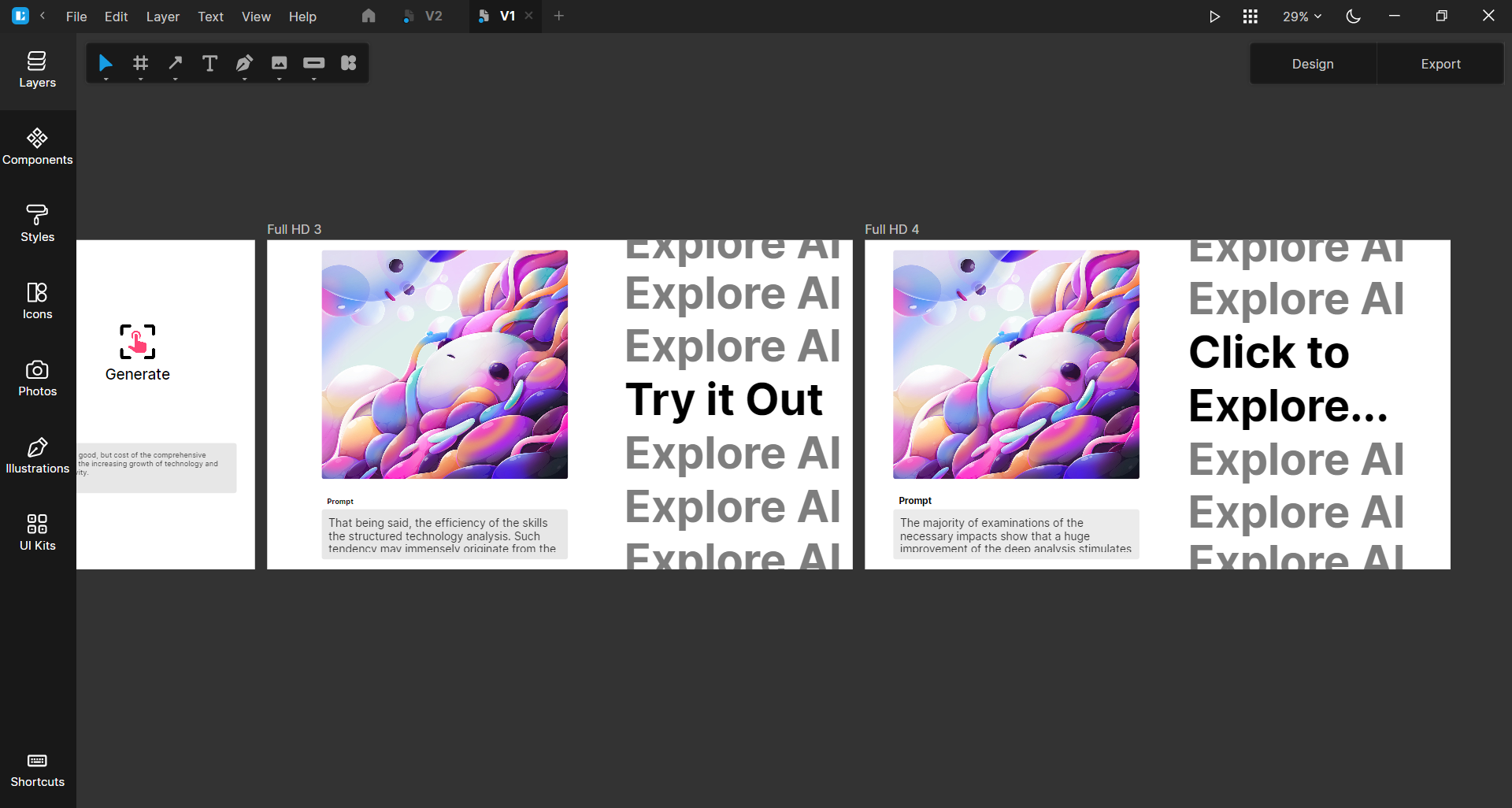

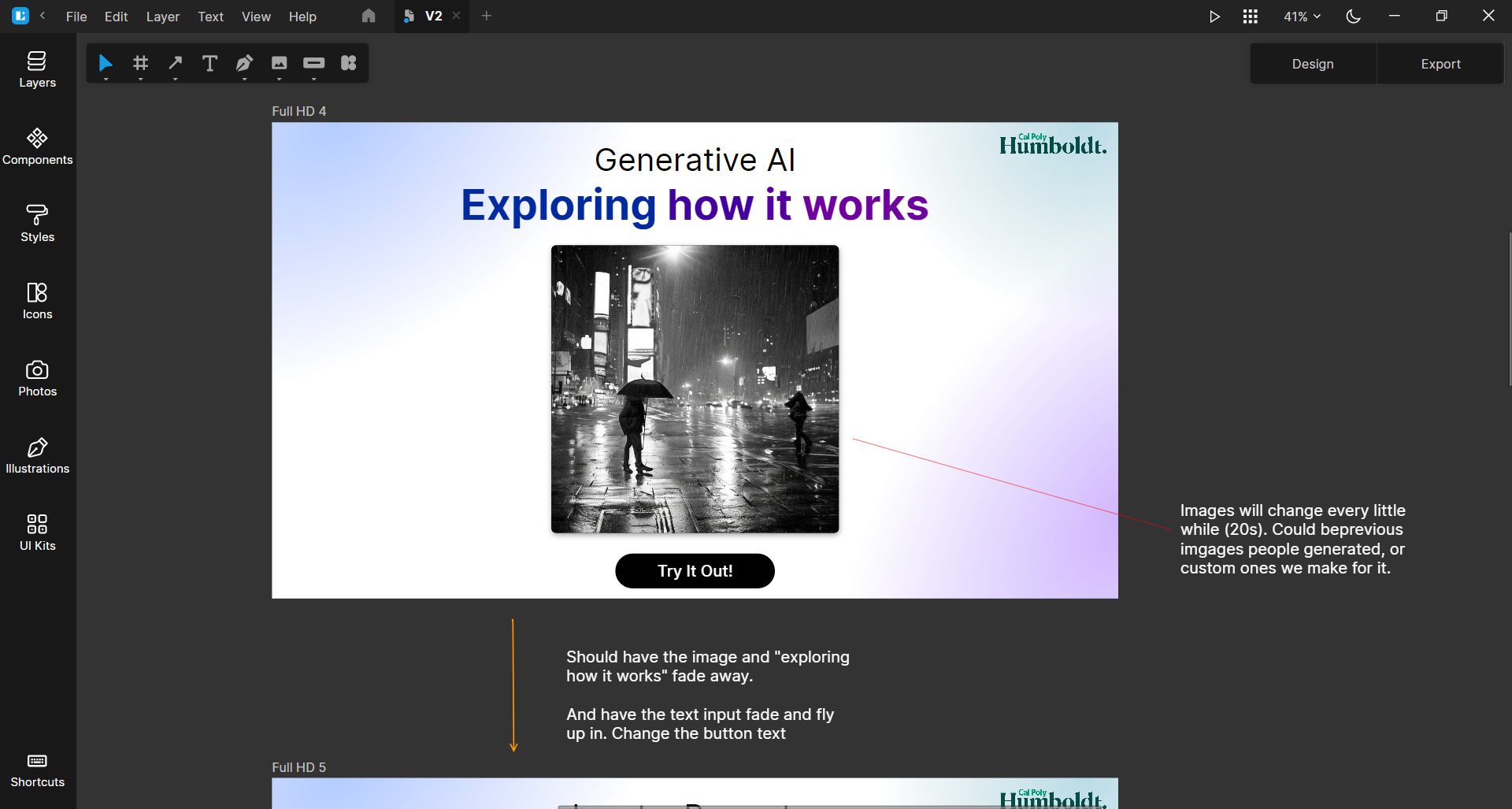

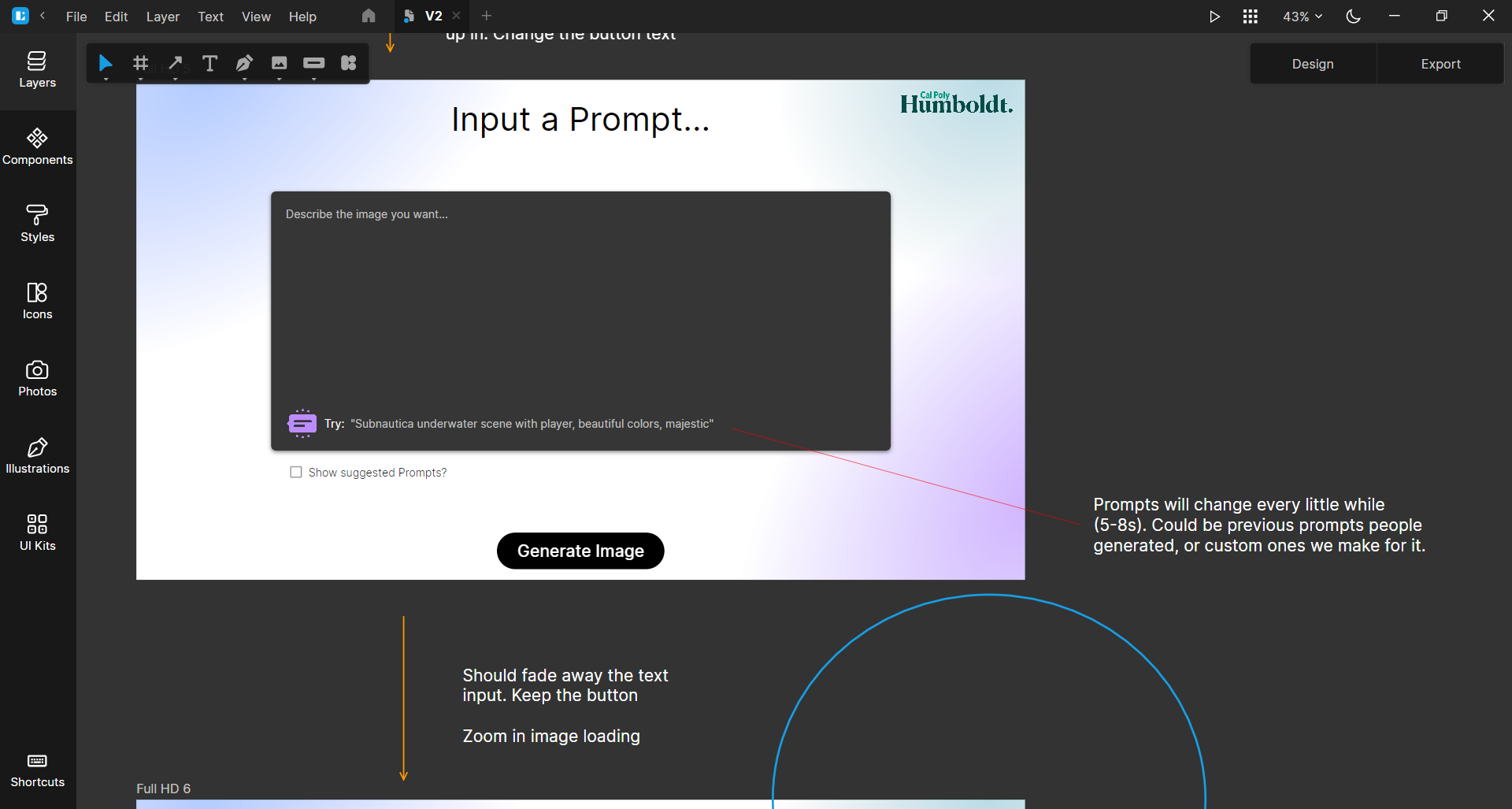

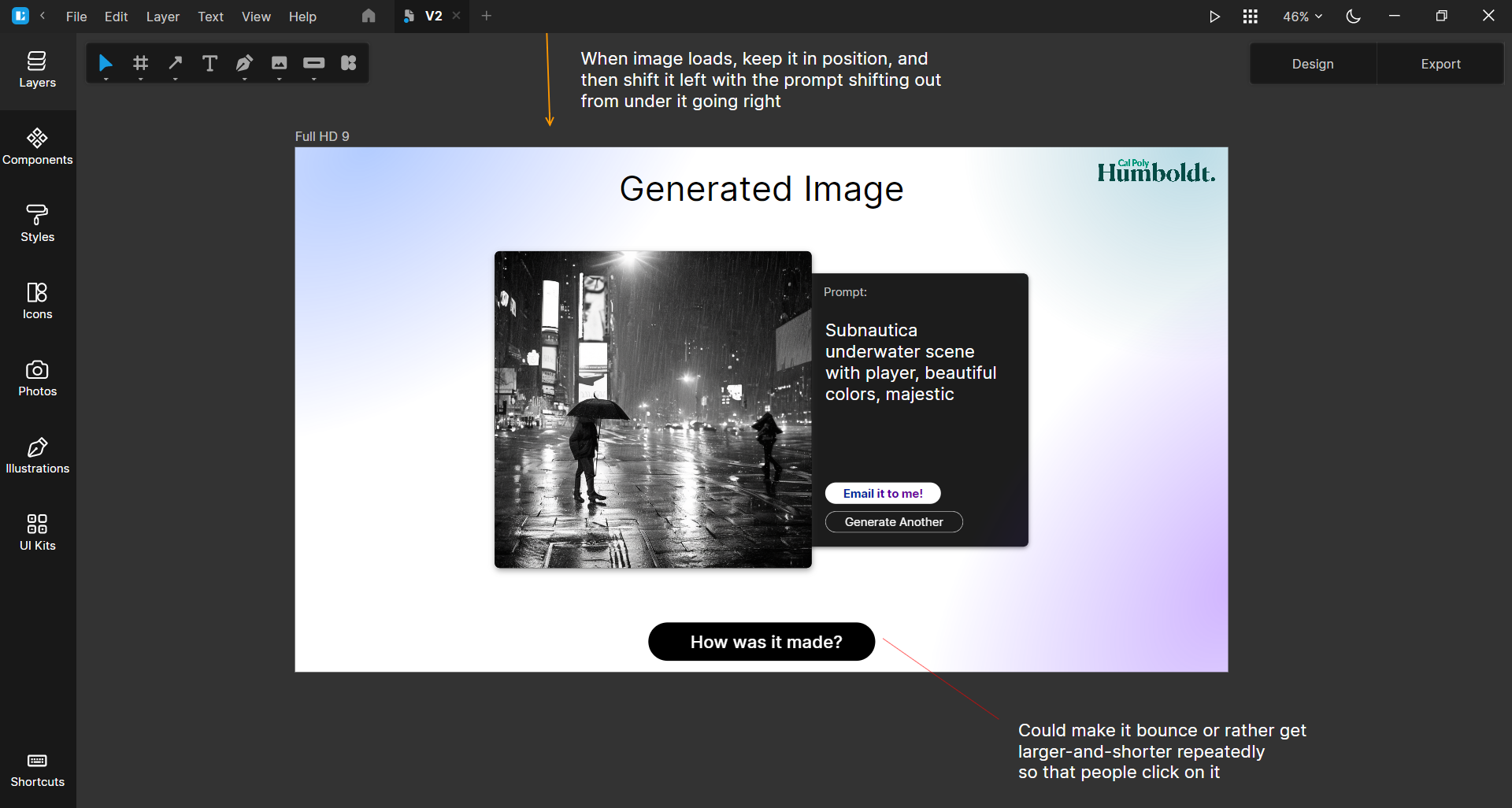

The webpage was constructed using vanila JavaScript, HTML, and CSS. The reason being that the webpage was simple enough that I didn't need a framework. It was designed in a software called Lunacy, and below is a set of images showing the initial design images.

Creating an image-generation API

I needed to create an API that could generate images using Stable Diffusion. I used the free hosting on Cloudflare Workers AI, which is well packaged and not hard to use. Creating an API that handles errors, returns correct HTTP response codes, and is secure is a little more difficult, though. In particular, I struggled with, and then learned a lot about, headers and CORS policies for APIs.

Below is the API code on Cloudflare, if you're curious:

Cloudflare Worker API code:

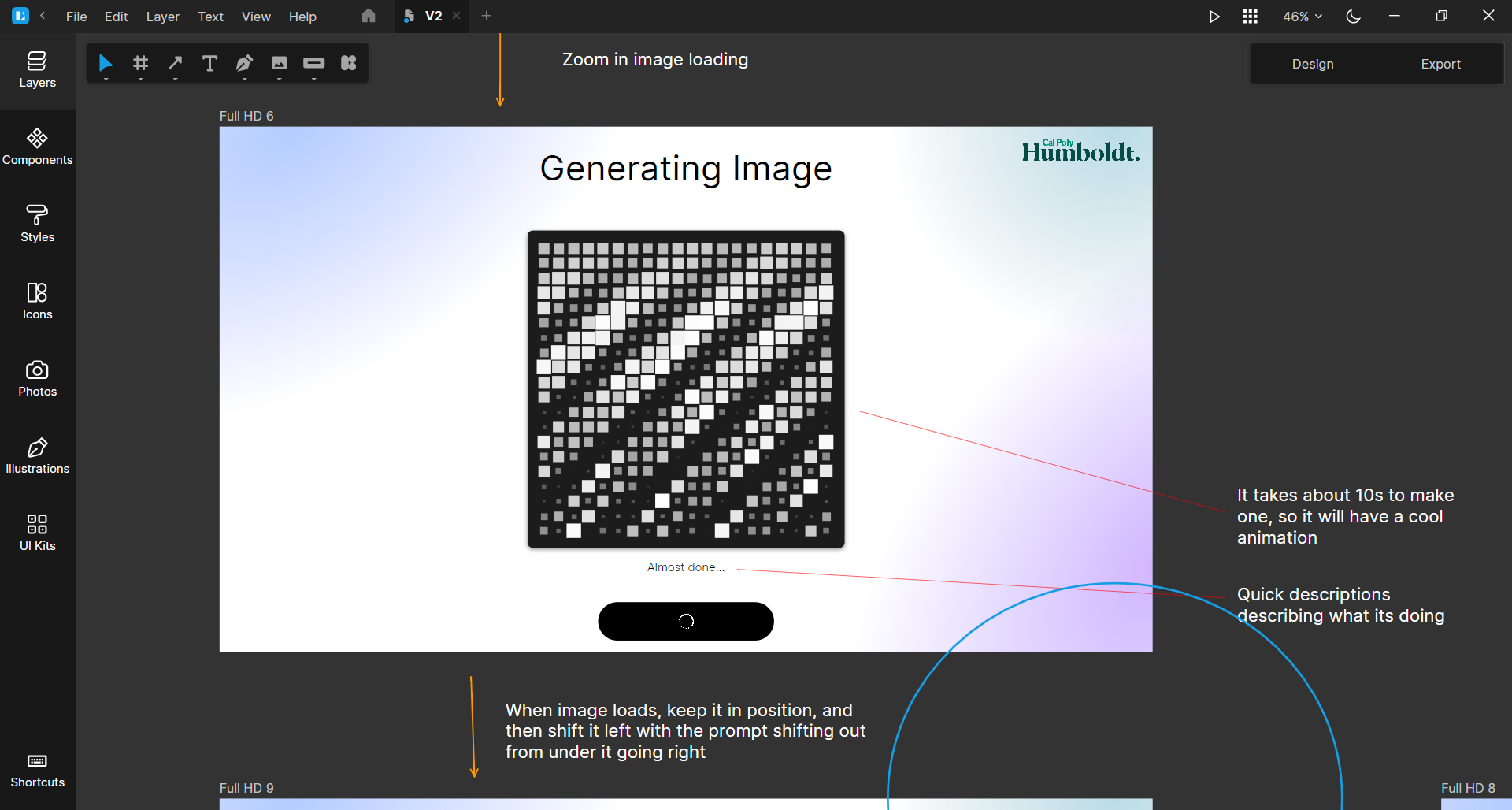
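
For anyone who wants something to copy and paste, here is a minimal sketch of the general shape such a Worker can take. It is not the exact code from the screenshot above. The allowed origin, the `AI` binding name, the model ID, and the choice of status codes are all assumptions made for illustration. It shows the three things mentioned earlier: answering the CORS preflight request, returning a fitting HTTP status code for each failure, and only handing back an image when generation succeeds.

```javascript
// Assumptions for illustration: the origin of the page that calls the API,
// a Workers AI binding named `AI`, and this Stable Diffusion model ID.
const ALLOWED_ORIGIN = "https://example.com";
const MODEL = "@cf/stabilityai/stable-diffusion-xl-base-1.0";

// Headers that tell the browser which page may call this API, and how.
function corsHeaders() {
  return {
    "Access-Control-Allow-Origin": ALLOWED_ORIGIN,
    "Access-Control-Allow-Methods": "POST, OPTIONS",
    "Access-Control-Allow-Headers": "Content-Type",
  };
}

// Small helper so every error has a status code, a JSON body, and CORS headers.
function jsonError(status, message) {
  return new Response(JSON.stringify({ error: message }), {
    status,
    headers: { ...corsHeaders(), "Content-Type": "application/json" },
  });
}

const worker = {
  async fetch(request, env) {
    // Browsers send an OPTIONS "preflight" before a cross-origin JSON POST.
    if (request.method === "OPTIONS") {
      return new Response(null, { status: 204, headers: corsHeaders() });
    }
    if (request.method !== "POST") {
      return jsonError(405, "Use POST");
    }

    let prompt;
    try {
      ({ prompt } = await request.json());
    } catch {
      return jsonError(400, "Request body must be JSON");
    }
    if (typeof prompt !== "string" || prompt.trim() === "") {
      return jsonError(400, "Missing prompt");
    }

    try {
      // Workers AI returns the generated image as binary data.
      const image = await env.AI.run(MODEL, { prompt });
      return new Response(image, {
        headers: { ...corsHeaders(), "Content-Type": "image/png" },
      });
    } catch {
      return jsonError(502, "Image generation failed");
    }
  },
};

// In a real Worker module, this object would be the default export:
// export default worker;
```

Restricting `Access-Control-Allow-Origin` to one known page, rather than `*`, is one simple way to keep other sites from using the endpoint from a browser.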

Dealing with image generation delay

One of the challenges I faced was the delay in image generation. I am using the free Stable Diffusion hosting on Cloudflare Workers AI, so I am at the mercy of however long Cloudflare takes to compute. Usually the delay is between 10 and 12 seconds. So I added some delay before showing the loader, so that it looks like it's running for only about 7 to 9 seconds.

- I made the slide-out animation for the prompt section run for 1 second.

- I made the fade-in animations for the loader 1.5 seconds long.

- I also added a 1 second delay between when someone clicks "Generate Image" and when the loading animations play. A slight delay is needed to make sure the API request was sent correctly, but it doesn't need to be longer than about 0.2 seconds for functionality.

The delays aren't very noticeable to the user, but they make the generation feel quicker and smoother because of the nice animations.
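
The timing trick above can be sketched roughly like this. It is a simplified illustration, not the page's actual code: the function names, the callback names (`startRequest`, `hidePrompt`, `showLoader`, `showImage`), and the order in which the animations fire are all assumptions. The key idea is that the request starts on the click, so the pause and the animations overlap with generation time instead of adding to it.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Hypothetical helper: POSTs the prompt to the image API and returns a Blob.
// `fetchImpl` is injectable so the function can be exercised without a network.
async function requestImage(apiUrl, prompt, fetchImpl = fetch) {
  const response = await fetchImpl(apiUrl, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ prompt }),
  });
  if (!response.ok) {
    throw new Error(`Image request failed with status ${response.status}`);
  }
  return response.blob();
}

// Starts the request immediately, then waits before playing the loading
// animations, so the loader is on screen for less time than generation takes.
async function generateWithStagedLoader({
  startRequest,
  hidePrompt,   // e.g. triggers the 1 s slide-out animation
  showLoader,   // e.g. triggers the 1.5 s fade-in animation
  showImage,
  loaderDelayMs = 1000,
}) {
  const imagePromise = startRequest();
  // Mark the promise as handled so an early failure does not surface as an
  // unhandled rejection during the pause; the await below still rethrows it.
  imagePromise.catch(() => {});

  await sleep(loaderDelayMs);
  hidePrompt();
  showLoader();

  const image = await imagePromise;
  showImage(image);
  return image;
}
```

On the page, `startRequest` could be something like `() => requestImage(apiUrl, promptText)`, and the other callbacks could simply toggle the CSS classes that run the animations.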